The bytes you're billed for Prometheus remote write data can be higher than the bytes your Prometheus server sends to New Relic. To avoid being surprised by the difference, we recommend you review how data compression affects billed bytes.

Understand data compression and billing

Prometheus remote write data is sent to New Relic compressed for faster, lossless transmission. When ingested, that data is uncompressed and decorated so that it can be properly used with New Relic features, such as entity synthesis. Some difference between the compressed and uncompressed byte rates is expected, but the size of that difference for Prometheus remote write data matters because of New Relic's billing model.

You're billed based on the computational effort needed to ingest your data, as well as the size of the data stored within New Relic. Decompression and data transformations can result in the final uncompressed bytes stored being around 15x the size of the compressed bytes sent.
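As a rough planning aid, the arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration: the function name `estimate_stored_gb` is hypothetical, the default factor of 15 is the approximate value quoted above rather than a guaranteed ratio, and the 124 GB/day input is the figure used in the example later in this section.

```python
def estimate_stored_gb(compressed_gb_per_day: float, expansion_factor: float = 15.0) -> float:
    """Estimate stored GB/day from compressed GB/day sent.

    expansion_factor is an assumption: roughly 15x per the text above.
    Your real ratio depends on your labels and metrics.
    """
    if compressed_gb_per_day < 0 or expansion_factor <= 0:
        raise ValueError("inputs must be non-negative and the factor positive")
    return compressed_gb_per_day * expansion_factor


stored = estimate_stored_gb(124)
# Decimal units (1 TB = 1000 GB), matching the figures in this document.
print(f"{stored:.0f} GB/day (~{stored / 1000:.2f} TB/day)")  # 1860 GB/day (~1.86 TB/day)
```

Treat the result as an order-of-magnitude estimate and compare it against the byte counts you actually observe with the NRQL queries in this document.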

For example, based on a sampling of time series data we gathered when simulating real world traffic, you might see something like this:

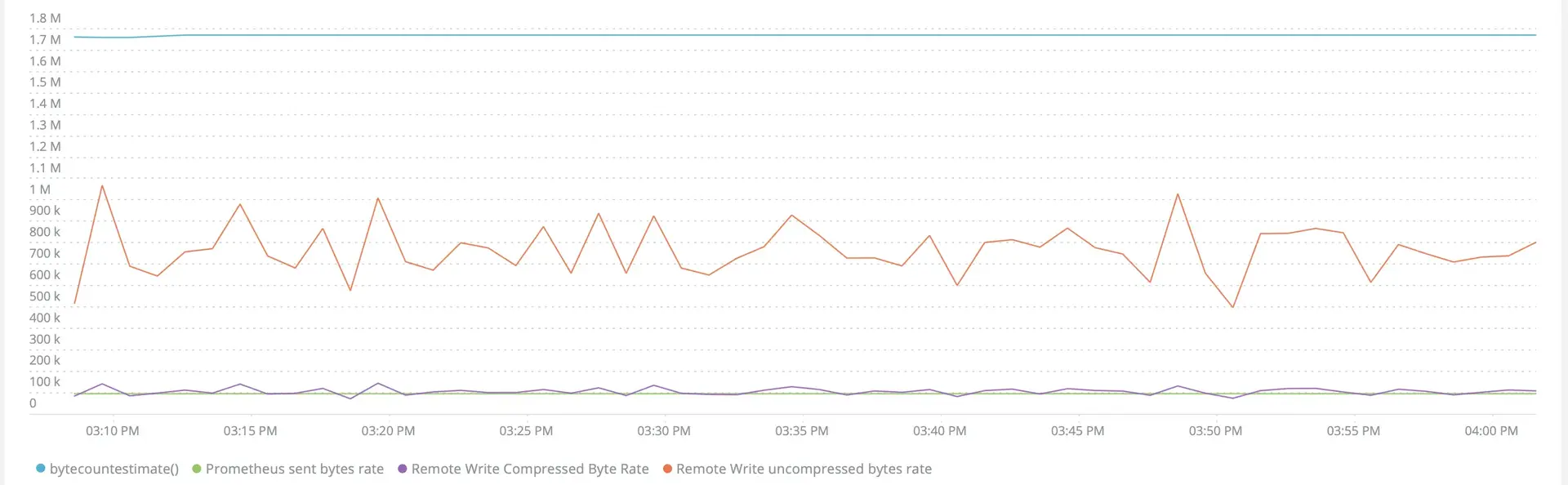

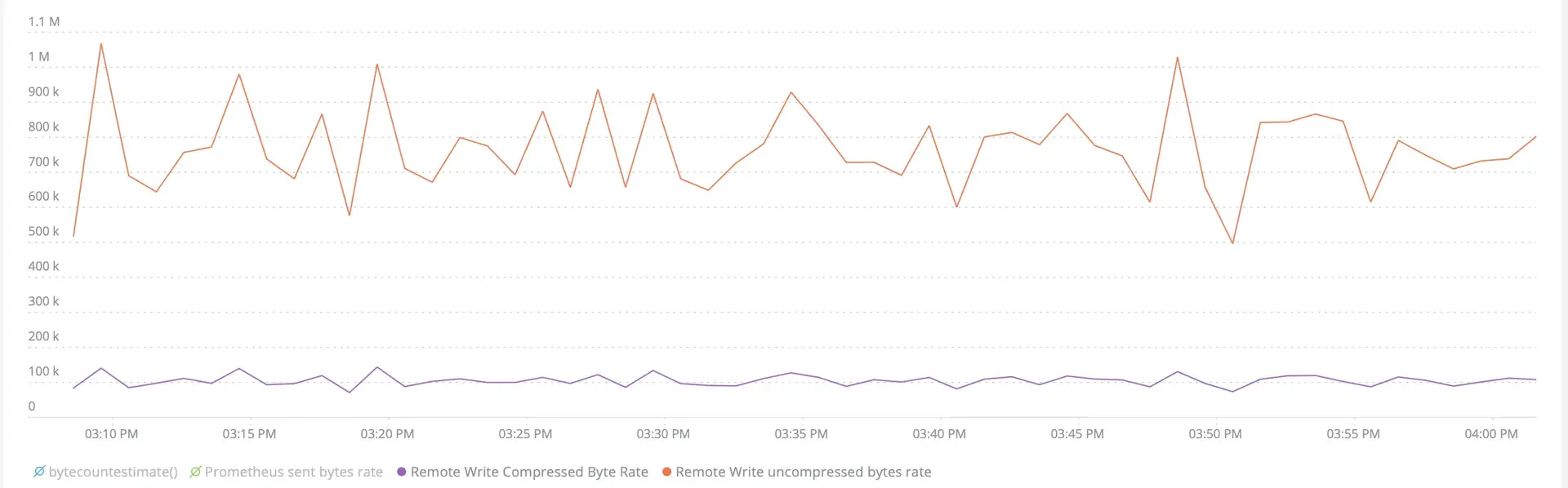

~124 GB/day of compressed data sent = ~1.86 TB of uncompressed data stored

Below is a simulation of the byte rate changes as Prometheus remote write data moves through our system. In this case, metrics were generated by ingesting a local Prometheus server's remote write scrape of a local node-exporter.

Note how the Prometheus sent byte rate closely matches the remote write compressed byte count that we record on our end just before uncompressing the data points. We can attribute the increased variance of the remote write compressed byte rate to the nature of processing the data through our distributed systems.

As the data points are uncompressed, the 5-10x expansion factor is reflected in the difference between the remote write compressed data byte rate and the remote write uncompressed bytes rate, which are measurements taken right before and after data decompression.

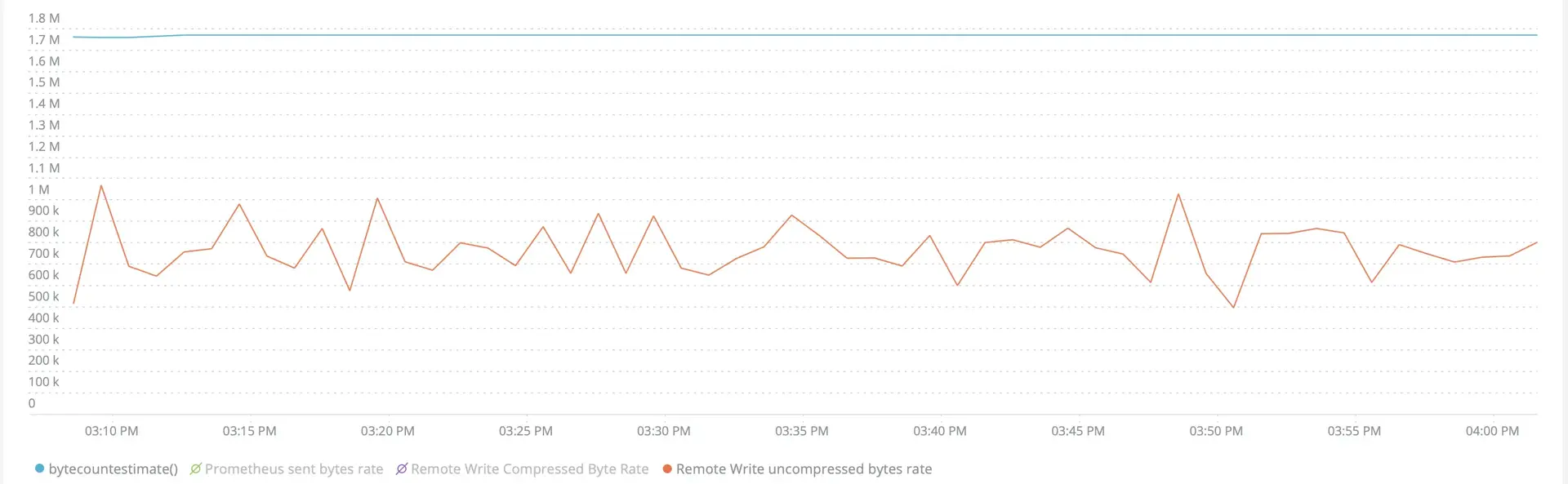
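To get a feel for why decompression expands the data so much, the sketch below compresses a small, label-heavy payload and restores it. It rests on several assumptions: `zlib` from the Python standard library stands in for Snappy (which is not in the standard library), the payload is plain text rather than the protocol buffer encoding that remote write actually uses, and the sample metric lines are made up for illustration. The ratio it prints is therefore not the 5-10x figure above; it only shows that repetitive time series data compresses well and that the round trip is lossless.

```python
import zlib

# Hypothetical payload: one node-exporter style metric repeated across CPUs and modes.
modes = ("idle", "user", "system", "iowait")
lines = [
    f'node_cpu_seconds_total{{cpu="{cpu}",mode="{mode}",instance="localhost:9100",job="node"}} {cpu * 100 + i}'
    for cpu in range(8)
    for i, mode in enumerate(modes)
]
raw = "\n".join(lines).encode("utf-8")

# zlib is a stand-in for Snappy here; both are lossless compressors.
compressed = zlib.compress(raw)
restored = zlib.decompress(compressed)

# Lossless: the restored bytes are identical to the original bytes.
assert restored == raw

print(f"compressed:   {len(compressed)} bytes")
print(f"uncompressed: {len(raw)} bytes")
print(f"expansion on decompression: {len(raw) / len(compressed):.1f}x")
```

Repeated label names and values are what make the compressed form so small, which is also why the uncompressed form looks large by comparison.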

Finally, as the data is transformed and enrichments are performed, the difference between the remote write uncompressed bytes and the bytecountestimate() can be seen below. The bytecountestimate() listed is a measure of byte count of the final state of the data before being stored.

To give a better understanding of the transformations and additions that Prometheus remote write data can go through, here's a comparison of two representations of the prometheus_remote_storage_bytes_total metric, a measure reported by the Prometheus server.

The first is a representation as given by Prometheus, and the second is the NRQL query counterpart.

Prometheus server representation:

prometheus_remote_storage_bytes_total{instance="localhost:9090", job="prometheus", remote_name="5dfb33", url="https://staging-metric-api.newrelic.com/prometheus/v1/write?prometheus_server=foobarbaz"} 23051

NRQL query representation:

{
  "endTimestamp": 1631305327668,
  "instance": "localhost:9090",
  "instrumentation.name": "remote-write",
  "instrumentation.provider": "prometheus",
  "instrumentation.source": "foobarbaz",
  "instrumentation.version": "0.0.2",
  "job": "prometheus",
  "metricName": "prometheus_remote_storage_bytes_total",
  "newrelic.source": "prometheusAPI",
  "prometheus_remote_storage_bytes_total": {
    "type": "count",
    "count": 23051
  },
  "prometheus_server": "foobarbaz",
  "remote_name": "5dfb33",
  "timestamp": 1631305312668,
  "url": "https://staging-metric-api.newrelic.com/prometheus/v1/write?prometheus_server=foobarbaz"
}

Tip

The above example is a simplified comparison meant to illustrate the underlying concepts, so it makes lighter than average use of labeling and featured metrics. The real-world versions will look a little different because they have more complexity.
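The growth from enrichment can be made concrete by measuring the two representations above. The following is a minimal sketch, not how New Relic computes bytecountestimate(): it simply compares the UTF-8 size of each text serialization and lists the attributes that appear only in the NRQL result. The variable and function names are illustrative, and the values are copied from the example above.

```python
import json

# The sample as reported by the Prometheus server.
prometheus_sample = (
    'prometheus_remote_storage_bytes_total{instance="localhost:9090",'
    'job="prometheus",remote_name="5dfb33",'
    'url="https://staging-metric-api.newrelic.com/prometheus/v1/write?prometheus_server=foobarbaz"} 23051'
)
prometheus_labels = {"instance", "job", "remote_name", "url"}
metric_name = "prometheus_remote_storage_bytes_total"

# The same data point as returned by an NRQL query.
stored_point = {
    "endTimestamp": 1631305327668,
    "instance": "localhost:9090",
    "instrumentation.name": "remote-write",
    "instrumentation.provider": "prometheus",
    "instrumentation.source": "foobarbaz",
    "instrumentation.version": "0.0.2",
    "job": "prometheus",
    "metricName": metric_name,
    "newrelic.source": "prometheusAPI",
    metric_name: {"type": "count", "count": 23051},
    "prometheus_server": "foobarbaz",
    "remote_name": "5dfb33",
    "timestamp": 1631305312668,
    "url": "https://staging-metric-api.newrelic.com/prometheus/v1/write?prometheus_server=foobarbaz",
}


def utf8_size(text: str) -> int:
    """Size of a string in bytes when encoded as UTF-8."""
    return len(text.encode("utf-8"))


prom_bytes = utf8_size(prometheus_sample)
# Compact JSON keeps whitespace from inflating the comparison.
stored_bytes = utf8_size(json.dumps(stored_point, separators=(",", ":")))

# Attributes present after ingest that were not Prometheus labels or the metric itself.
added_attributes = sorted(set(stored_point) - prometheus_labels - {metric_name})

print(f"Prometheus text form: {prom_bytes} bytes")
print(f"Enriched JSON form:   {stored_bytes} bytes")
print(f"Attributes added on ingest ({len(added_attributes)}): {', '.join(added_attributes)}")
```

Text sizes are only a proxy for stored bytes, but the list of added attributes shows where the extra data comes from: timestamps, instrumentation metadata, and the source identifiers attached during ingest.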

Use NRQL to query your data count

Check the following NRQL queries to gather byte count information.

To view estimated byte count stored at New Relic:

FROM Metric SELECT rate(bytecountestimate(), 1 minute) AS 'bytecountestimate()' WHERE prometheus_server = 'INSERT_PROMETHEUS_SERVER_NAME' SINCE 1 hour ago TIMESERIES AUTO

To monitor Prometheus bytes sent to New Relic:

FROM Metric SELECT rate(sum(prometheus_remote_storage_bytes_total), 1 minute) AS 'Prometheus sent bytes rate' WHERE prometheus_server = 'INSERT_PROMETHEUS_SERVER_NAME' SINCE 1 hour ago TIMESERIES AUTO

External references

Here are some external links to Prometheus and GitHub docs that clarify compression and encoding.

The Prometheus documentation on its use of Snappy compression in encoding: "The read and write protocols both use a snappy-compressed protocol buffer encoding over HTTP. The protocols are not considered as stable APIs yet and may change to use gRPC over HTTP/2 in the future, when all hops between Prometheus and the remote storage can safely be assumed to support HTTP/2."