To provide more context-specific answers, New Relic AI can use a technique called Retrieval Augmented Generation (RAG) through the New Relic AI knowledge connector. The LLMs behind New Relic AI have broad general knowledge, and RAG grounds their responses in your organization's own documentation.

By setting up the New Relic AI knowledge connector, you can expect tangible outcomes such as faster alert event resolution, more accurate and context-aware AI responses, and reduced manual searching across multiple documents. This unified approach helps your team make better decisions and respond to issues more efficiently.

Use cases and value

The following examples show how the knowledge connector integration helps tackle challenges like fragmented documentation and slow alert event response by surfacing relevant information.

Key features

With the New Relic AI knowledge connector, you can:

- Access relevant context from your internal runbooks and documentation directly within New Relic AI, leading to a faster mean time to resolution (MTTR).

- Get answers that are specific to your environment and based on your own best practices and historical data.

- Easily find solutions to problems that have been solved before. Ask questions like:

  - "Who has resolved similar issues in the past?"

  - "What are the standard triage steps for this type of alert?"

  - "Show me the runbook for a database connection limit exceeded error."

Important

At this time, all indexed documents can be retrieved by all users within your organization's New Relic account. Please verify that only appropriate content is indexed, as there's currently no option to restrict access or redact information after indexing.

How it works

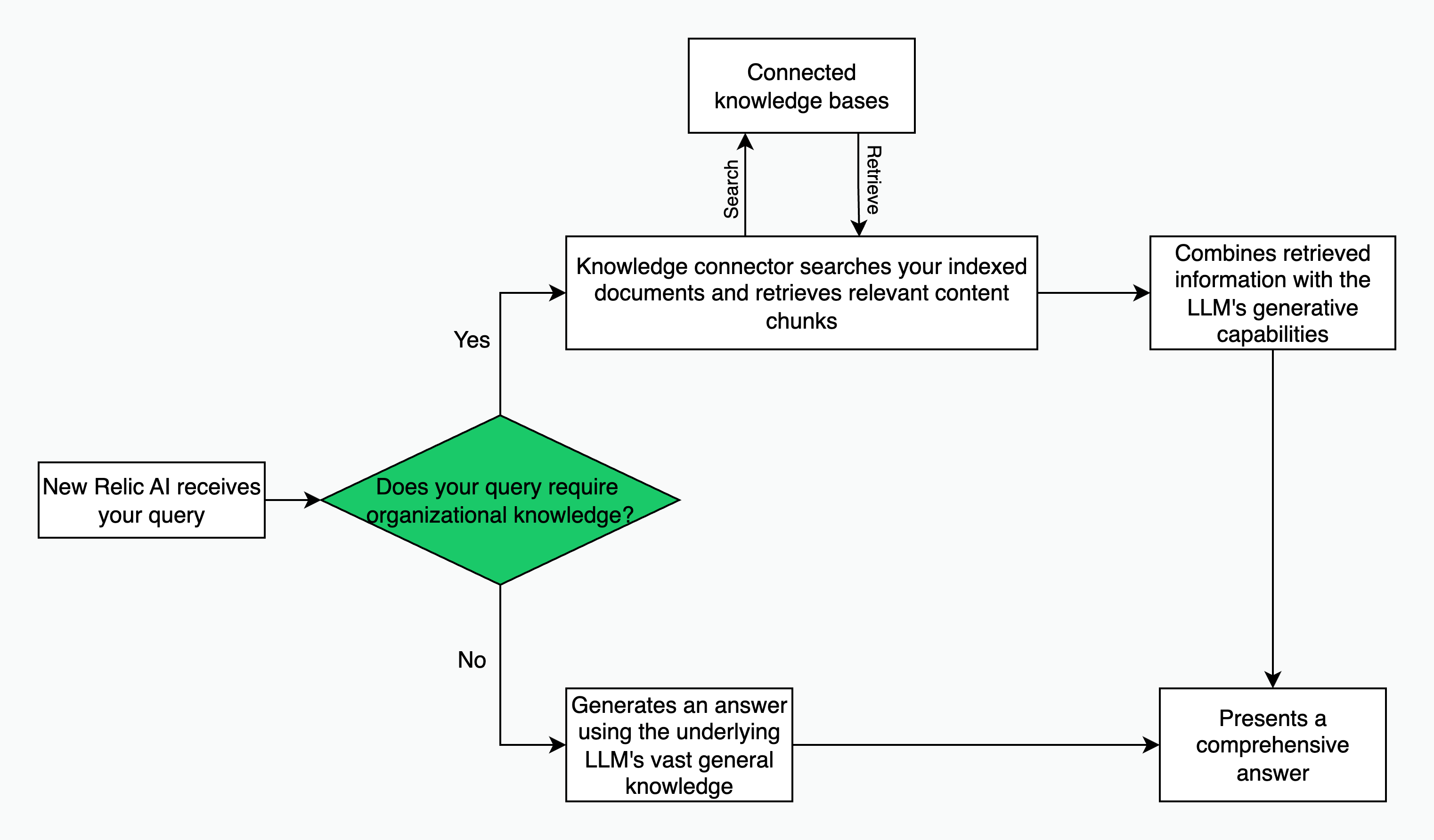

The knowledge connector securely integrates with your content and knowledge bases, such as Confluence, to enhance New Relic AI's responses with your specific organizational knowledge. The process follows these steps:

Index: Once your knowledge bases are connected to the New Relic AI platform, the knowledge connector performs an initial indexing of your documents. You can configure this process to run on a recurring basis, ensuring that New Relic AI always has access to the most up-to-date information as your documents evolve.

Retrieval: When you ask a question in New Relic AI, the system searches the indexed content for the most relevant information. This step ensures that the context is pulled directly from your trusted, internal documentation.

Generation: Finally, the system passes the retrieved information, together with your question, to the underlying LLM. The LLM then generates a context-aware answer grounded in your specific data and best practices.

If a query doesn't require your organizational knowledge, New Relic AI will generate an answer using the underlying LLM's vast general knowledge. In both cases, the goal is to provide you with the most relevant and accurate information possible.
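To make the index, retrieval, and generation steps concrete, here is a deliberately tiny sketch of the general RAG pattern in Python. It is not New Relic's implementation: the word-overlap scoring stands in for a real search index, the `min_score` cutoff and sample documents are hypothetical, and instead of calling an LLM it only builds the augmented prompt that would be sent to one. When no document is relevant enough, it falls back to a plain prompt, mirroring the general-knowledge path described above.

```python
import re
from dataclasses import dataclass


@dataclass
class Doc:
    title: str
    body: str


def tokenize(text: str) -> set[str]:
    """Lowercased word set: a crude stand-in for a real search index."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(docs: list[Doc]) -> list[tuple[Doc, set[str]]]:
    """Index: precompute tokens per document (a recurring sync would rebuild this)."""
    return [(doc, tokenize(f"{doc.title} {doc.body}")) for doc in docs]


def retrieve(index: list[tuple[Doc, set[str]]], question: str,
             k: int = 2, min_score: int = 2) -> list[Doc]:
    """Retrieval: rank documents by how many words they share with the question."""
    q = tokenize(question)
    scored = [(len(q & toks), doc) for doc, toks in index]
    relevant = [(score, doc) for score, doc in scored if score >= min_score]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:k]]


def build_prompt(question: str, context: list[Doc]) -> str:
    """Generation input: ground the question in retrieved context, if any."""
    if not context:
        # Nothing relevant was indexed: fall back to the LLM's general knowledge.
        return f"Question: {question}"
    sources = "\n\n".join(f"[{d.title}]\n{d.body}" for d in context)
    return f"Answer using these internal documents:\n{sources}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = [
        Doc("Database connection limit exceeded runbook",
            "Raise max_connections and recycle idle pool connections."),
        Doc("Memory leak in Service X during v2.3 deployment",
            "Roll back to v2.2, then patch the cache eviction bug."),
    ]
    index = build_index(docs)
    question = "How do I fix a database connection limit exceeded error?"
    print(build_prompt(question, retrieve(index, question)))
```

In a production system the retrieval step would use a proper search index and the prompt would be sent to the LLM; in this sketch, the `min_score` cutoff is what routes unrelated questions to the general-knowledge path.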

Prerequisites

Before you begin using the New Relic AI knowledge connector, ensure that:

- Only documents suitable for organization-wide access are indexed.

- Sensitive information is redacted.

- All the documents to be indexed comply with your organization's internal data security and privacy policies.

- New Relic AI is enabled for your account.

- Appropriate user permissions are configured for indexing. You need the "Org Product Admin" role, which lets you perform actions, such as setting up and managing knowledge connectors, that may have future billing implications.

You have two options to assign the Org Product Admin role:

- Apply to an existing user group: Add the Org Product Admin role to an existing group of users who will be responsible for managing the knowledge connectors.

- Create a dedicated group: For more granular control, create a new user group specifically for this purpose and assign the Org Product Admin role to that group.

This flexibility lets your organization control who can manage indexed content.
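Because indexed content can't be redacted afterwards, it can help to scrub documents before you connect them. The Python sketch below is a hypothetical pre-indexing pass, not a New Relic feature: the two regular expressions (email addresses and `key = value`-style secrets) are illustrative assumptions and will not catch every kind of sensitive data, so treat it as a starting point alongside your organization's own review process.

```python
import re

# Illustrative patterns only (assumptions); extend them to match your data policies.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# Matches e.g. "api_key = abc123" or "password: hunter2", keeping the label.
SECRET = re.compile(r"(?i)\b(api[_-]?key|token|password|secret)(\s*[:=]\s*)\S+")


def redact(text: str) -> str:
    """Return a copy of text with email addresses and key/value secrets masked."""
    text = EMAIL.sub("[REDACTED EMAIL]", text)
    # Keep the label and separator (groups 1 and 2), mask only the value.
    return SECRET.sub(r"\1\2[REDACTED]", text)


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com, api_key = abc123"))
```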

Configure data source and indexing frequency

You can connect your knowledge sources using either pre-built connectors or the knowledge connector API for more custom integrations.

Best practices for optimizing your knowledge source content

To help New Relic AI surface relevant information, provide accurate answers, and accelerate alert event resolution, structure your internal documentation with the AI in mind. While these guidelines focus on alert event retro documents, which support quick and efficient alert event management, the principles apply broadly to other knowledge types. The knowledge connector performs best when your source documents are clear, consistent, and rich in specific details relevant to their intended use.

Follow these best practices when creating and maintaining your retro documents and other knowledge base articles to ensure New Relic AI can optimally leverage the indexed information:

Descriptive title: Ensure each document has a clear and descriptive title. This helps New Relic AI fetch the closest matching content based on alert titles and user queries. For example, "Memory Leak in Service X during v2.3 Deployment" is more effective than "System Slowdown."

Alert event summary: Begin retro documents with a brief, high-level summary of the alert event.

Customer impact details: Include specific details about the customer impact (for example, number of affected users, services degraded, financial impact) to help first responders and the AI gauge severity.

Entities affected and downstream entities: Document the specific services, microservices, databases, or other infrastructure entities directly affected by an issue, as well as any known downstream entities impacted.

Why did it happen (root causes): Clearly articulate the identified root cause(s) of an issue, avoiding ambiguity. Be specific. For example, "Memory leak due to recent deployment v2.3" is more effective than "System became slow."

Mitigating actions: Detail the specific, actionable steps taken to mitigate and ultimately resolve an issue. This allows New Relic AI to suggest proven fixes for similar future issues.

Future prevention: Outline long-term prevention strategies, follow-up tasks, and improvements identified to avoid recurrence of similar issues.

Tag related entities/services: Support for direct entity impact in RAG is still evolving, but tagging related entities and services within your documents helps future enhancements accurately surface the right blast radius and relevant information.

Teams involved (who fixed it): List the specific teams or departments who worked on an issue, especially those who played a crucial role in diagnosis or resolution. This helps New Relic AI direct first responders to the right experts.

When did it happen: Include the precise timestamp of an alert event's start.

Which alert triggered it: Specify the exact alert or condition that initially triggered an alert event response.

How long was the alert event: Document the duration of the alert event from detection to resolution.

Participants in alert event: List individuals who actively participated in the alert event resolution process.

Owning team: Clearly identify the team responsible for the service or component where the alert event originated or the team owning the resolution.

Regularly update and review: Ensure your knowledge connector is configured to run on a recurring basis, and make it a practice to regularly review and update your source documents. This ensures New Relic AI always has access to the most up-to-date and accurate information.
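If your retro documents follow a shared template, a simple check can flag documents that are missing some of the sections recommended above before they are indexed. The sketch below is hypothetical: it assumes each section appears as a line starting with the section label followed by a colon (for example, `Root cause: ...`), and the labels are an abbreviated paraphrase of the list above, not a format New Relic requires. The title check uses an arbitrary word-count threshold as a rough proxy for "descriptive".

```python
# Hypothetical labels paraphrasing the recommended retro sections above.
RECOMMENDED_SECTIONS = [
    "Summary",
    "Customer impact",
    "Entities affected",
    "Root cause",
    "Mitigating actions",
    "Future prevention",
    "Teams involved",
    "Start time",
    "Triggering alert",
    "Duration",
    "Participants",
    "Owning team",
]


def missing_sections(doc_text: str) -> list[str]:
    """Return recommended labels that never appear as 'Label:' at a line start."""
    starts = {
        line.split(":", 1)[0].strip().lower()
        for line in doc_text.splitlines()
        if ":" in line
    }
    return [s for s in RECOMMENDED_SECTIONS if s.lower() not in starts]


def vague_title(title: str, min_words: int = 4) -> bool:
    """Flag very short titles such as 'System Slowdown' (threshold is arbitrary)."""
    return len(title.split()) < min_words


if __name__ == "__main__":
    retro = "Summary: DB pool exhausted\nRoot cause: Memory leak due to recent deployment v2.3"
    print(missing_sections(retro))
```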

To start indexing Confluence content, you need:

- Confluence URL with your unique Confluence cloud ID: https://api.atlassian.com/ex/confluence/[cloud_id]

- A Confluence API key with the following minimum required scopes:

  - read:confluence-content.all

  - read:confluence-space.summary

  - read:content:confluence

  - read:content-details:confluence

- A query in Confluence Query Language (CQL) that filters the returned content down to the documents you want indexed
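The URL and CQL filter are plain strings, so it can help to assemble and sanity-check them before pasting them into the connector form. This Python sketch only builds strings and does not call any Confluence endpoint. The space key `ENG` and the labels `retro` and `postmortem` are placeholder assumptions; `type`, `space`, and `label` are standard CQL fields. To stay simple, the sketch rejects values containing double quotes instead of escaping them.

```python
CONFLUENCE_BASE = "https://api.atlassian.com/ex/confluence/{cloud_id}"


def confluence_url(cloud_id: str) -> str:
    """Build the connector's Confluence URL from your cloud ID."""
    cloud_id = cloud_id.strip()
    if not cloud_id or "/" in cloud_id:
        raise ValueError("cloud_id must be a bare ID, not a URL or path")
    return CONFLUENCE_BASE.format(cloud_id=cloud_id)


def _quote(value: str) -> str:
    """Wrap a CQL value in double quotes; refuse values that would need escaping."""
    if '"' in value:
        raise ValueError(f"unsupported character in {value!r}")
    return f'"{value}"'


def retro_cql(space_key: str, labels: list[str]) -> str:
    """CQL selecting pages in one space that carry any of the given labels."""
    if not labels:
        raise ValueError("at least one label is required")
    label_clause = " OR ".join(f"label = {_quote(label)}" for label in labels)
    return f"type = page AND space = {_quote(space_key)} AND ({label_clause})"


if __name__ == "__main__":
    print(confluence_url("your-cloud-id"))
    print(retro_cql("ENG", ["retro", "postmortem"]))
```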

To start indexing your content and use the knowledge connector with New Relic AI, follow these steps:

Navigate to one.newrelic.com > Integrations & Agents.

Search for NRAI Knowledge Connectors.

Select one of the available connectors.

Enter the connector details:

| Field name | Description |
|---|---|
| Connector name | Enter a unique name for your connector (for example, Demo Connector). |
| Knowledge category | Select the knowledge category from the drop-down list. This helps New Relic AI look for information in the right place. |

Click Continue.

On the Data source authentication page, enter the required information to authenticate your data source. Click Continue.

On the Configure data source page, enter the required information and define which documents need to be fetched at what frequency. Click Create.

On successful configuration, you’ll see the status of your connector on the Connector overview page.

| Field name | Description |
|---|---|
| Status | Shows whether the data source is available to New Relic AI. |
| Last Synced | Shows when the data was last updated. |
| Syncing | If this option is turned off, no new data updates occur. Existing data remains unchanged. |
| Delete Connector | Deleting a connector deletes all indexed data. |

Data synchronization and querying

Once your data source is connected, New Relic will begin to synchronize and index your knowledge articles. After the initial synchronization is complete, your team can begin asking questions through the New Relic AI chat. Additionally, New Relic AI will automatically use the knowledge connector tool to search your indexed documents and provide contextual responses on the “What happened previously?” portion of the issue page.

Supported connectors

Important

To request a connector that isn't currently supported, fill out this form.

Here are the supported connectors:

| Connector | Purpose |
|---|---|
| Confluence | Connect your retro documents to understand “How were similar issues resolved in the past?” |
| Custom Documents | Index files such as PDF, CSV, and TXT files. |